Demonstrators carry signs and wave the Iranian flag as they rally outside the White House, June 22, 2025, in Washington, to protest the U.S. military strike on three sites in Iran. (AP)

Images and videos of explosions, fires, protests and weapons went viral after the United States’ June 21 attacks on three Iranian nuclear sites — but many of them didn’t show what was actually happening.

Instead, they were generated by artificial intelligence, taken out of context or recorded from video games or flight simulators. Many of them were shared by X accounts with blue check marks, which were formerly associated with accounts belonging to people or organizations with verified identities. (Now they can be purchased.)

It can be difficult to know at first glance on social media platforms whether fearmongering captions actually fit the photo or video you see; sometimes community notes programs add context, but sometimes they don’t.

PolitiFact fact-checked some of the misleading images and videos about the U.S. attack and the reaction to it. Here’s a guide to what to avoid, with tips on how to verify conflict imagery.

AI-generated imagery often contains visual inconsistencies

A blue-check account on X called "Ukraine Front Line" posted a video June 22 that shows lightning striking a plume of smoke, erupting in a fiery haze. The caption warned of nuclear war: "Please pay attention to the Lightning! This is the surest sign that the explosion was indeed nuclear."

But the video doesn’t show a real event. It was uploaded June 18 on YouTube with the caption "ai video." The account’s bio reads, "All videos on this channel are produced with artificial intelligence."

Some generative AI models allow people to write prompts to generate realistic videos, and the results are getting more sophisticated.

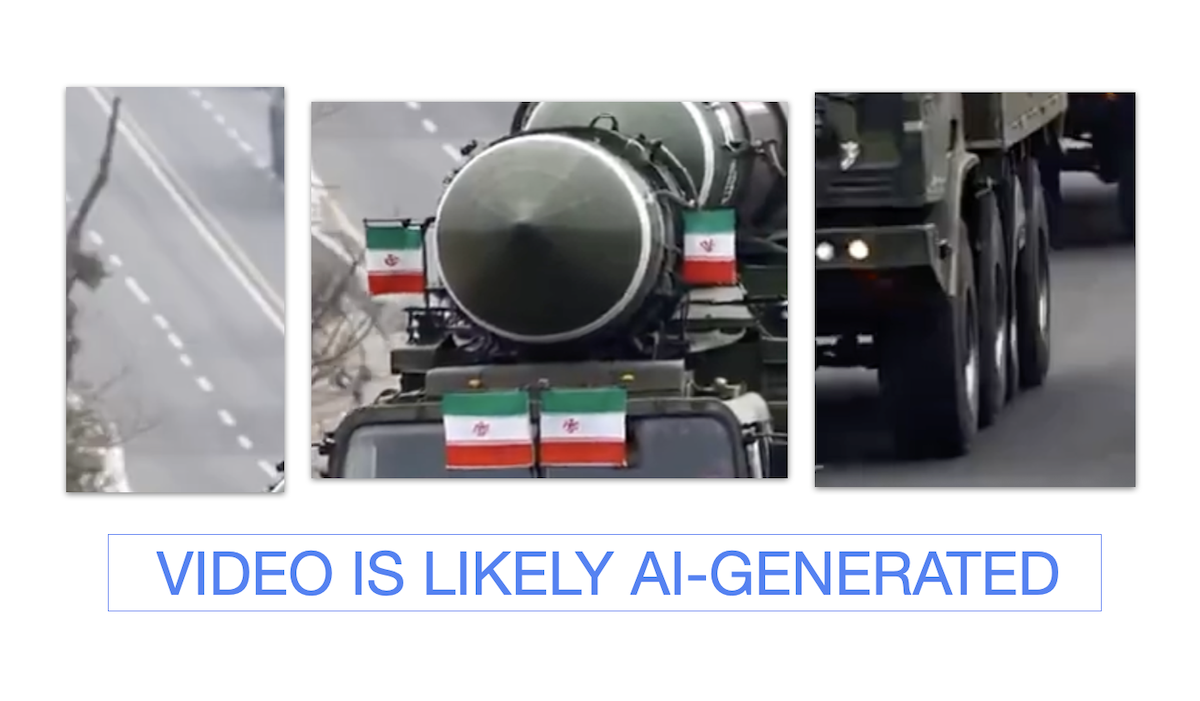

But the results aren’t perfect, and a close look at the videos will frequently reveal visual inconsistencies. One video, captioned "Getting ready for (tonight’s) strike," showed trucks with Iranian flags carrying missiles. A closer look showed that the lines on the road were uneven, a tire on the second truck appeared to have a chunk ripped out of it, and the characters on the flags didn’t match those on the real Iranian flag.

(Screenshots from X)

Hany Farid, a UC Berkeley professor who specializes in digital forensics, wrote on LinkedIn that many AI-generated videos last eight seconds or less, or are composed of eight-second clips edited together. That’s because Google’s Veo 3, a text-to-video model, has an eight-second limit. Some videos have visible watermarks, but users can crop them out.
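For readers who want to apply the eight-second observation to a batch of clips, here is a minimal Python sketch. The function name, the half-second tolerance and the idea of checking for multiples of eight seconds are illustrative assumptions, not part of Farid's analysis. A match is only a reason to look closer, since plenty of authentic videos are short too.

```python
# Clip limit cited above for Google's Veo 3 text-to-video model.
VEO_CLIP_SECONDS = 8.0


def matches_eight_second_pattern(duration_s: float, tolerance_s: float = 0.5) -> bool:
    """Return True if a video's length fits the eight-second pattern.

    Hypothetical helper: flags videos that run about eight seconds or less,
    or whose total length is close to a whole number of eight-second clips.
    The tolerance is an assumed allowance for trimming and encoding.
    """
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    if duration_s <= VEO_CLIP_SECONDS + tolerance_s:
        return True
    # Distance from the nearest multiple of eight seconds.
    remainder = duration_s % VEO_CLIP_SECONDS
    distance = min(remainder, VEO_CLIP_SECONDS - remainder)
    return distance <= tolerance_s


if __name__ == "__main__":
    for seconds in (6.0, 16.2, 12.0):
        print(seconds, matches_eight_second_pattern(seconds))
```

The duration itself has to come from somewhere else, such as the length shown in a video player. The sketch only encodes the rule of thumb.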

Misrepresented media has historically been more prominent than AI imagery during breaking news events. But during this war, both pro-Israel and pro-Iran accounts have shared AI imagery. Emmanuelle Saliba, chief investigative officer for the digital forensics company Get Real Labs, told BBC Verify it was the "first time we’ve seen generative AI be used at scale during a conflict."

Misinformers continue to share old images and videos out of context

As misinformers make greater use of AI, they also continue to share plenty of real images and videos with the wrong context.

"Mass protests erupt across America as citizens take to the streets in outrage after the US launches attack on Iran," the caption of a June 22 X post read. But the video was uploaded June 14, the day of "No Kings" protests throughout the country.

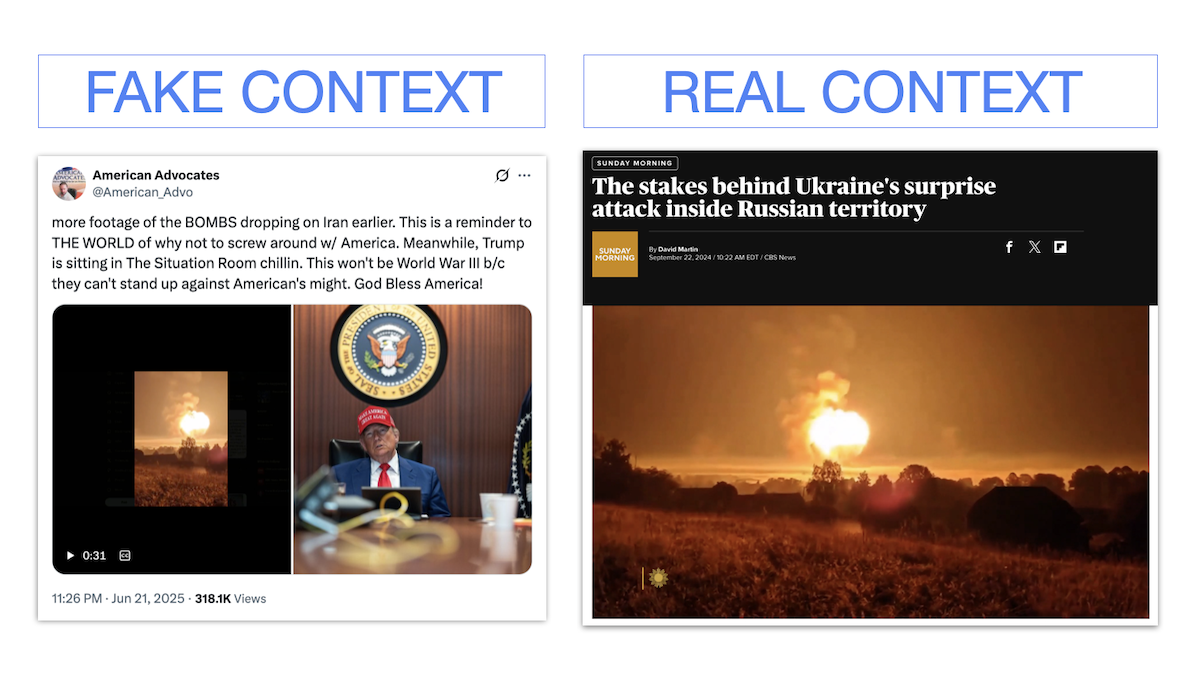

Another X video shared the night of the U.S. attacks showed a big plume of fire and smoke. "More footage of the BOMBS dropping on Iran earlier," read the video caption. But that’s false; the video came from a 2024 Ukrainian attack on Russia.

(Screenshots from X, CBS News)

A reverse image search can reveal whether a video has been posted online before, and what the original context was. Tools such as Google Lens and TinEye can show where images or videos were shared across platforms, who posted them and when the earliest post was made. Captions in images published by news outlets will likely show when and where they were taken.
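Once a search turns up earlier copies, the core check is a date comparison: if a video was online before the event it supposedly shows, the caption cannot be right. The Python sketch below illustrates that logic with the protest video described above, which was uploaded June 14 and reshared June 22 as a reaction to the June 21 attacks. The `Sighting` class and both function names are hypothetical, and the dates are entered by hand rather than fetched from Google Lens or TinEye.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Sighting:
    """One appearance of an image or video, recorded by hand from a search."""
    source: str
    posted_on: date


def earliest_sighting(sightings: list[Sighting]) -> Sighting:
    """Return the sighting with the oldest posting date."""
    return min(sightings, key=lambda s: s.posted_on)


def predates_event(sightings: list[Sighting], event_date: date) -> bool:
    """True if the media was already online before the claimed event."""
    if not sightings:
        return False
    return earliest_sighting(sightings).posted_on < event_date


if __name__ == "__main__":
    found = [
        Sighting("X post claiming protests over the Iran strikes", date(2025, 6, 22)),
        Sighting("earlier upload of the same video", date(2025, 6, 14)),
    ]
    us_strikes = date(2025, 6, 21)
    print(earliest_sighting(found).source)
    print(predates_event(found, us_strikes))
```

A result of `False` does not confirm a video is authentic. It only means no earlier copy was found.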

Video game footage pulls from prior conflicts’ playbooks

Imagery recorded from flight simulators and military video games is also misrepresenting the conflict.

"This is the B2 Spirit Stealth Bomber taking off in California!" read a June 21 X post by a blue check account, before the attack on Iran.

Except it wasn’t a real B-2 bomber. Checking the video’s watermark leads to a TikTok account that posted the video May 15 with the hashtag "#microsoftflightsimulator," a reference to Microsoft Flight Simulator, a simulation game available on Xbox.

Gameplay from the video game Arma is also susceptible to misuse during armed conflict. PolitiFact fact-checked claims that used Arma footage during the Ukraine-Russia war and the Israel-Hamas war.

Keyword searches on video platforms such as YouTube can show if videos match video game footage that has previously been uploaded.

Simple ways to analyze accounts for trustworthiness

Checking an account’s profile and bio will often reveal whether it is credible. Many false and misleading claims came from accounts that use the Iranian flag as their profile photo and have display names that could be mistaken for those of legitimate news sources.

Such accounts include "Iran Updaes Commentary" (typo included), and "Iran News Daily Commentary." Another account — with a blue check — is named "Iran’s Reply."

(Screenshots from X)

But these accounts aren’t affiliated with the Iranian government or any credible source. PolitiFact found that posts from these accounts gain hundreds of thousands, if not millions, of views but contain low-quality content, such as AI-generated imagery.

Our Sources

Poynter, Why Community Notes mostly fails to combat misinformation, June 30, 2023

X post by Priya Purohit, June 22, 2025

Instagram post, June 14, 2025

X post by Ukraine Front Line, June 22, 2025

YouTube video by @cmlacyn, June 18, 2025

YouTube account, cemilacıyangallery, accessed June 22, 2025

LinkedIn post by Hany Farid, June 16, 2025

Google DeepMind, Veo, accessed June 23, 2025

PolitiFact, ‘Not the AI election’: Why artificial intelligence did not define the 2024 campaign, Dec. 19, 2024

BBC, Israel-Iran conflict unleashes wave of AI disinformation, June 20, 2025

X post by American Advocates, June 21, 2025

CBS News, The stakes behind Ukraine's surprise attack inside Russian territory, Sept. 22, 2024

X post by LynneP, June 21, 2025

TikTok post by charles_usanewyork, May 15, 2025

Semafor, Why a decade-old video game still fuels social media hoaxes, May 18, 2025

X post by Iran Updaes Commentary, June 22, 2025

Xbox Wire, Microsoft Flight Simulator 2024 is Available Now, Nov. 19, 2024

PolitiFact, No, this isn’t real footage of downed helicopters during an ambush, May 20, 2022

PolitiFact, Video clip of aircraft shot down is from video game, not Israel-Hamas conflict, Oct. 9, 2023

X post by Iran News Daily Commentary, June 22, 2025

X post by Iran’s Reply, June 22, 2025